Markov chains are widely used in Natural Language Processing, for example in part-of-speech tagging, where corpora are studied to establish how sentences are constructed. The same technique is very powerful and can also be used to generate new material (words, text, et cetera). In this first post I will talk about generating words.

- How it works

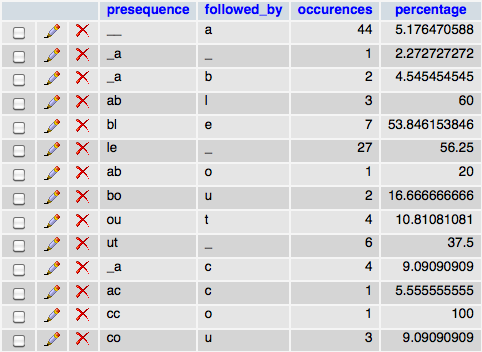

Given a corpus, letter patterns are studied at different depths. At depth one, we establish the probability of each letter following a single letter. At depth two, we establish the probability of each letter following a sequence of 2 letters. The same goes for greater depths. The result of all this studying is a table of probabilities defining the chances that a given letter follows a given sequence of letters.
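To make the table-building step concrete, here is a minimal sketch in Python. It is not the code behind this post: the function name `build_table`, the use of `"_"` as a stand-in for the null letter, and the three-word corpus are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

NULL = "_"  # stand-in for the "null letter" (assumes "_" never occurs in the corpus)

def build_table(words, depth):
    """Map each sequence of `depth` letters to the probabilities of the next letter."""
    counts = defaultdict(Counter)
    for word in words:
        # Pad with null letters so that word starts and word ends are learned too.
        padded = NULL * depth + word + NULL
        for i in range(len(word) + 1):
            context = padded[i:i + depth]
            following = padded[i + depth]
            counts[context][following] += 1
    # Turn raw counts into probabilities that add up to 1 for each sequence.
    table = {}
    for context, counter in counts.items():
        total = sum(counter.values())
        table[context] = {letter: n / total for letter, n in counter.items()}
    return table

# Hypothetical toy corpus, only to show the shape of the table.
table = build_table(["racing", "race", "rain"], depth=2)
print(table["__"])  # letters that can start a word
print(table["ra"])  # letters that can follow "ra"
```

With this toy corpus every word starts with "r", and "ra" is followed by "c" twice and by "i" once, so "c" ends up twice as likely as "i" after "ra".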

When the time comes to generate words, this table of probabilities is used. Say that we need to generate a word at depth 2. We seed the word with 2 null letters, then we look in the table for all the letters that can follow a sequence of 2 null letters, along with their associated probabilities, which add up to 1. We generate a random number between 0 and 1 and use it to pick which letter comes next. Let’s say that the letter “r” was chosen. The current sequence is now made up of “null” and “r”. We use this sequence as the basis for our next letter and look for the letters that can follow it. We keep going until a null letter is drawn, signifying the end of the generated word.
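The generation loop described above could be sketched roughly like this in Python. Everything here is an assumption made for illustration: the hard-coded `TABLE` is a made-up depth-2 table, `"_"` stands in for the null letter, and `pick_letter` and `generate_word` are hypothetical names.

```python
import random

NULL = "_"  # stand-in for the "null letter" (an assumption of this sketch)

# Made-up depth-2 table: sequence -> {next letter: probability}.
TABLE = {
    "__": {"r": 1.0},
    "_r": {"a": 1.0},
    "ra": {"c": 0.5, "i": 0.5},
    "ac": {"e": 1.0},
    "ce": {NULL: 1.0},
    "ai": {"n": 1.0},
    "in": {NULL: 1.0},
}

def pick_letter(choices, rng):
    """Pick a letter by walking the cumulative probabilities."""
    threshold = rng.random()  # random number in [0, 1)
    cumulative = 0.0
    for letter, probability in choices.items():
        cumulative += probability
        if threshold < cumulative:
            return letter
    return letter  # guard against floating-point rounding

def generate_word(table, depth, rng=random):
    sequence = NULL * depth  # seed with `depth` null letters
    word = ""
    while True:
        letter = pick_letter(table[sequence], rng)
        if letter == NULL:  # a null letter ends the word
            return word
        word += letter
        sequence = (sequence + letter)[-depth:]  # keep only the last `depth` letters

print(generate_word(TABLE, depth=2))
```

With this tiny table the only possible outputs are "race" and "rain"; a table built from a real corpus is what allows words that do not exist to come out.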

Here’s a sample of a probability table:

- Benefits of this algorithm

It generates words that do not exist but respect the essence of the corpus it’s based on. This is really cool, for example, for generating words that sound English but aren’t (say, for random passwords that can be pronounced and remembered). We could also make a list of all the cool words (motorcycle, sunglasses, racing, et cetera) and extract their essence to generate, maybe, a product name that is based on coolness :).

Go ahead and play with it: