Markov chains are used primarily in Natural Language Processing for part-of-speech tagging. Corpora are studied to establish the construction of sentences. This is a very powerful algorithm that can also be used to generate new material (words, text, et cetera). In this first post I will talk about generating words.

- How it works

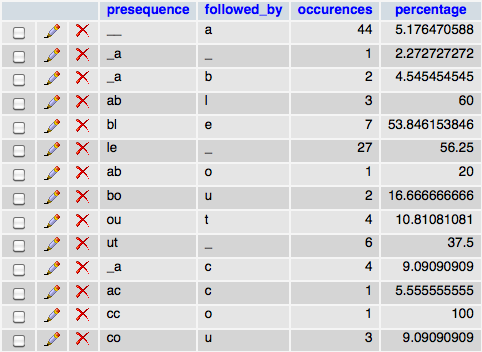

Given a corpus, letter patterns are studied at different depths. For depth one, the probability of a letter following another is established. For depth two the probability of a letter following a sequence of 2 letters is established. The same goes for greater depths. The result of all this studying is a table of probabilities defining the chances that letters follow given sequences of letters.

When the time comes to generate words, this table of probabilities is used. Say that we need to generate a word at depth 2, we seed the word with 2 null letters, then we look in the table for all the letters that can follow a sequence of 2 null letters and their associated probabilities. Their added probabilities will be 1 obviously. We generate a random number between 0 and 1 and use it to pick which following letter will be chosen. Let’s say that the letter “r” was chosen. Our generated word is now comprised of “null” and “r”. We now use this sequence as the basis for our next letter and look for the letters that can follow it. We keep going until an null letter is reached, signifying the end of the generated word.

Here’s a sample of a probability table:

- Benefits of this algorithm

It will generate words that do not exist but respect the essence of the corpus it’s based on. This is really cool for example to generate words that sound English but aren’t (say for random passwords that can be pronounced/remembered). We could also make a list of all the cool words (motorcycle, sunglasses, racing, et cetera) and extract their essence to generate maybe a product name that is based on coolness :).

Go ahead and play with it:

While this is fine, it’s actually inferior in practice to the very reasonable process of breaking the words up at the vowel group, consonant group level. If you rather than the letters go by the vowel clusters it’s far superior. Link, not individual letters but rather individual sounds, though really we use vowel-clusters as they are basically the same thing. So “Hello” gets ^-H-e, e-ll-o, o-$ links as each group of consecutive vowels or consonants is 1 link in the chain. Typically C-V, or V-C-V or V-C word parts.

That makes a lot of sense, seems like you’re talking about something very close to phonemes. There are many ways to slice and dice data to be fed to Markov models. You’ll see on this blog I have sentences and music as well. I’ve been thinking about applying it to many other things, not necessarily language based. 2D images, binary data, the sky is the limit 🙂

Thanks for the feedback !

Hello Ben,

Yes I understand markov concept, all of it is based about a transition matrix. This matrix is builded using probabilities and after you generates an output based on it.

I can really program it, there is not problem. But if I found source code from domain public I think it is better because it will be tested and bug free. The time is gold (-;

I am very much interested with this algorithm because I would like to apply it to random music generation. It is possible to get the source code. I will translate it to C++.

Thanks in advance.

You might be interested in the following then: http://ben.akrin.com/?p=2433

🙂

This algorithm can be used for a lot of things not just generation. I’ve generated words, texts & music with it. I’ve always wanted to see what images would look like but never got the time to work on it. At the end of the day I think it can be used for much more than that. Since it extracts the numerical essence of something I think it could be used in a unified and generic way to identify data. Meaning given a random sequence of bytes, are they arranged like language? like machine code? like encrypted data? like encrypted language? et cetera.

I’m happy to share some code with you but it’s a very simple algorithm in concept, I think you would spend more time trying to read my code than come up with your own. Of course your data structure is key, I use PHP & MySQL because it’s very easy to write, adjust and hold data. My first iteration was in C. I may even still have it somewhere…

Do you understand the concept of Markov chains to begin with?