Here is the most basic information needed to build closed organ pipes that sound decent. The information I found online was overwhelming and hard to distill down to the essential variables for a beginner. Throughout, variable names are in bold so you can match them against the diapason (the chart of pipe dimensions). This diapason is for a standard 29-note organ.

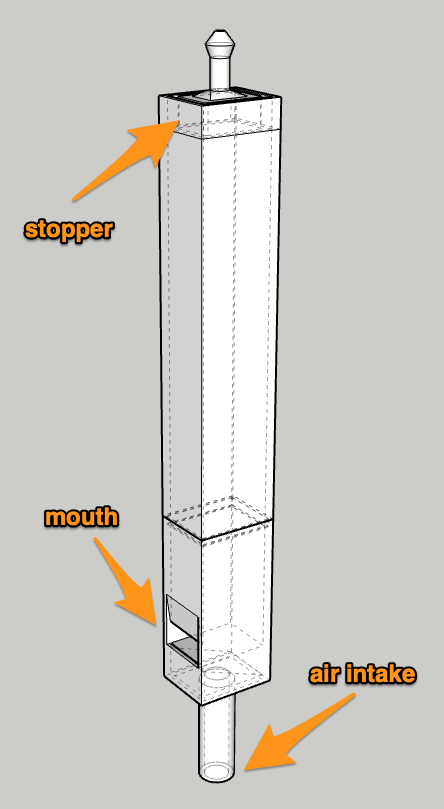

Here’s an organ pipe and its main parts. The stopper slides up and down to tune the pipe to the exact frequency.

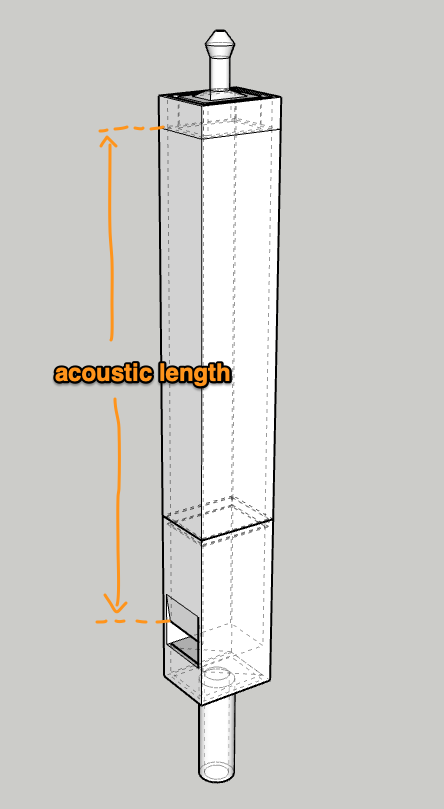

The frequency of the sound the pipe makes depends on the length from the lip (top of the mouth) to the bottom of the stopper. This length is called the **acoustic length**.
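As a rough first estimate, a closed (stopped) pipe behaves like a quarter-wave resonator: the wavelength of its fundamental is about four times the acoustic length, so L ≈ c / (4f). The sketch below assumes this ideal model with no corrections at the mouth, and a speed of sound of 343 m/s (air at about 20 °C). The function name is made up for illustration. Real pipes deviate from the estimate, which is why the stopper is adjustable.

```python
def acoustic_length_mm(freq_hz: float, speed_of_sound_m_s: float = 343.0) -> float:
    """Ideal quarter-wave estimate of the lip-to-stopper length of a stopped pipe.

    Assumes L = c / (4 f) with no end corrections; the result is in millimetres.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    length_m = speed_of_sound_m_s / (4.0 * freq_hz)
    return length_m * 1000.0  # metres -> millimetres, matching the diapason's units


if __name__ == "__main__":
    for note, freq in [("A3", 220.0), ("A4", 440.0), ("A5", 880.0)]:
        print(f"{note} {freq:6.1f} Hz -> {acoustic_length_mm(freq):6.1f} mm")
```

In this model, doubling the frequency halves the length, so each octave up calls for a pipe roughly half as long.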

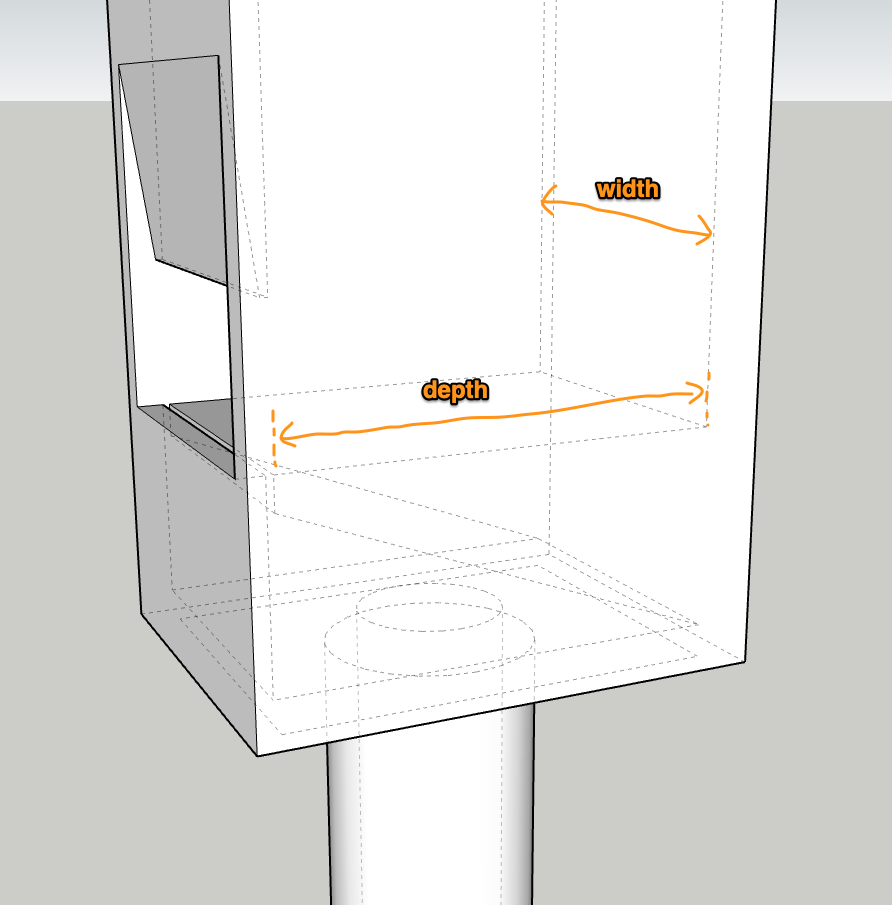

In reality, this length is not the only factor: the volume of the pipe also comes into the equation, so the inside **width** and **depth** matter too.
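Width and depth also matter, so it can help to compare pipes by the volume of air between the lip and the stopper rather than by length alone. This is a minimal sketch that treats the inside of the pipe as a plain rectangular box. That is a simplification, and the volume on its own does not predict pitch. The function name and the dimensions in the example are hypothetical.

```python
def air_volume_ml(acoustic_length_mm: float, inside_width_mm: float, inside_depth_mm: float) -> float:
    """Volume of the rectangular air column from lip to stopper, in millilitres.

    Treats the pipe interior as a simple box; 1 ml = 1000 mm^3.
    """
    return acoustic_length_mm * inside_width_mm * inside_depth_mm / 1000.0


# Two hypothetical pipes with the same acoustic length but different cross-sections.
narrow = air_volume_ml(200.0, 20.0, 25.0)
wide = air_volume_ml(200.0, 30.0, 36.0)
print(f"narrow: {narrow:.0f} ml, wide: {wide:.0f} ml")
```

Both pipes have the same acoustic length, yet the wider one holds more than twice as much air.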

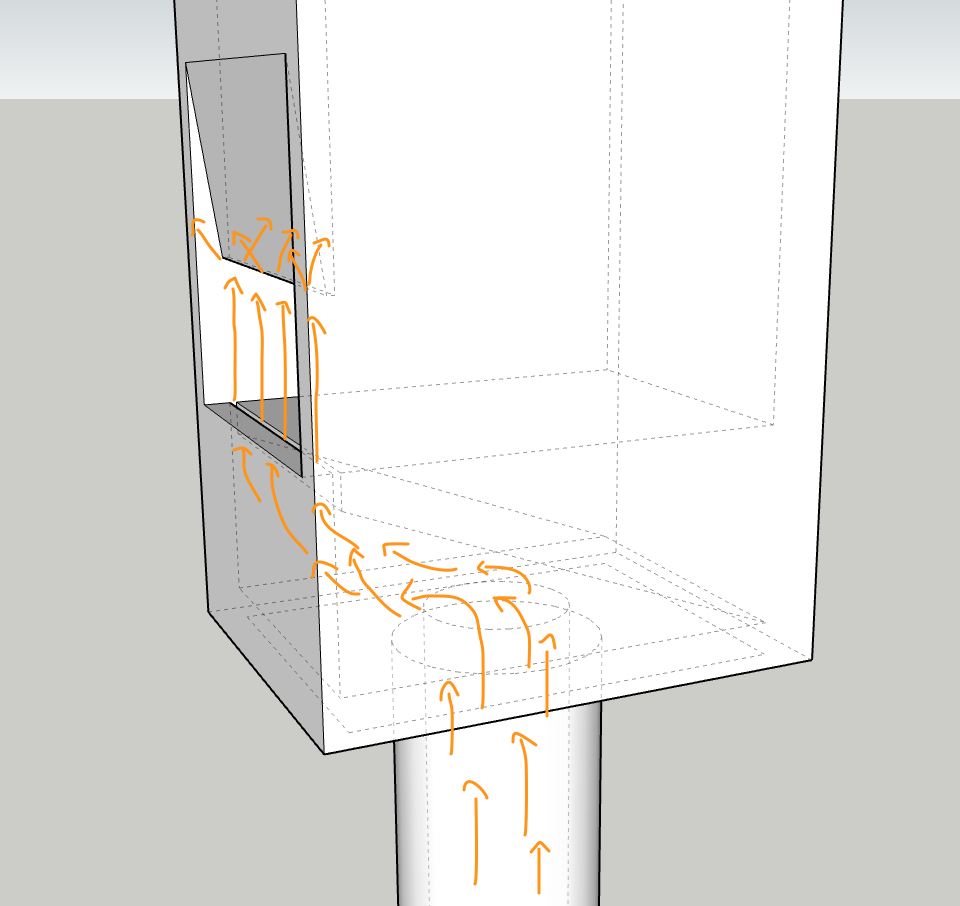

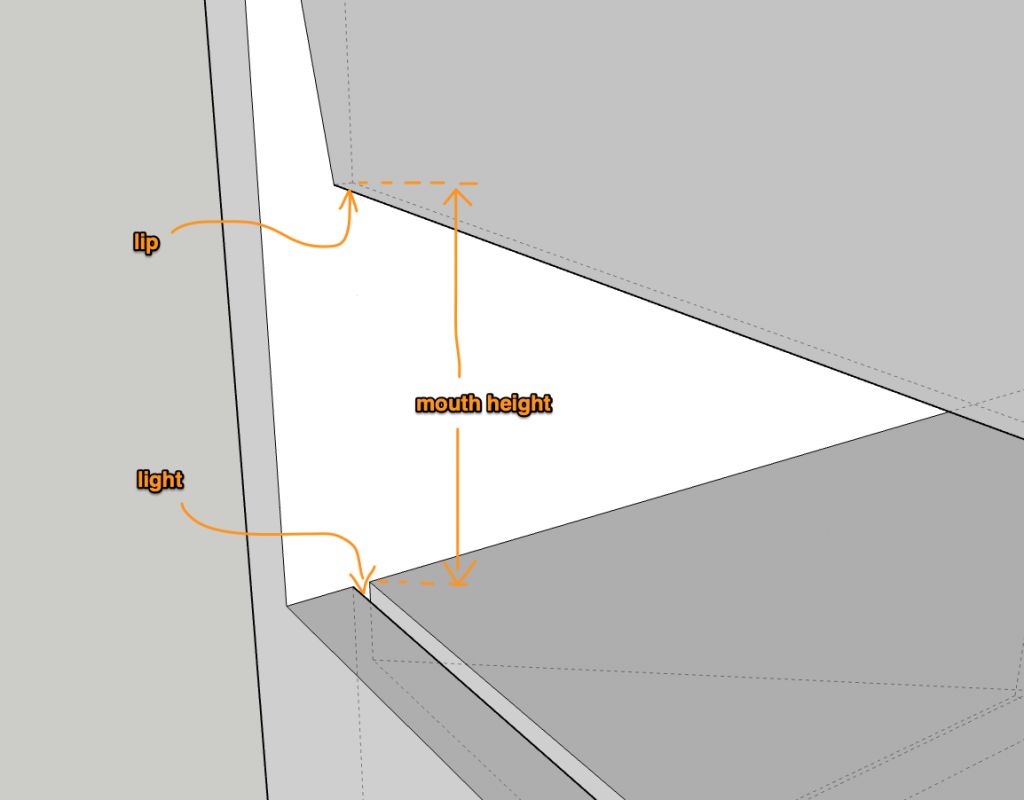

Of course, a lot of the action happens at the mouth. This is where the air gets split by the lip.

When the lip splits the air, the air stream oscillates back and forth between the two sides of the lip, and this oscillation is the vibration that produces the sound.

Zooming in on the mouth, we find two more variables that affect how well a pipe performs: the **mouth height** and the **light**. A bigger pipe needs more air to pass through and a higher mouth. The light is the opening the air escapes through before it hits the lip. There might be a better name for it in English, but I don’t know what it is, and I like the French name :). The lip and the light are the same size and perfectly aligned.
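The text above gives two rules of thumb: the lip matches the light, and a bigger pipe gets a higher mouth. Both are easy to check mechanically when copying dimensions off a diapason. This is a sketch with hypothetical field names and made-up dimensions. It assumes "bigger" means a longer acoustic length, and it only compares the widths of the lip and the light. It cannot check that they are aligned.

```python
from dataclasses import dataclass


@dataclass
class PipeSpec:
    """One row of a diapason, all dimensions in millimetres (field names are hypothetical)."""
    note: str
    acoustic_length: float  # lip to bottom of the stopper
    inside_width: float
    inside_depth: float
    mouth_height: float
    light_width: float      # width of the opening the air escapes through
    lip_width: float


def check_diapason(pipes: list[PipeSpec], tol_mm: float = 0.1) -> list[str]:
    """Return warnings for rows that break the rules of thumb; an empty list means none."""
    warnings = []
    for p in pipes:
        # Rule 1: the lip and the light should be the same size.
        if abs(p.lip_width - p.light_width) > tol_mm:
            warnings.append(
                f"{p.note}: lip ({p.lip_width} mm) and light ({p.light_width} mm) differ"
            )
    # Rule 2: a bigger pipe needs a higher mouth. "Bigger" is taken to mean a longer
    # acoustic length (assumption), so mouth height should never drop as length grows.
    ordered = sorted(pipes, key=lambda p: p.acoustic_length)
    for smaller, bigger in zip(ordered, ordered[1:]):
        if bigger.mouth_height < smaller.mouth_height:
            warnings.append(
                f"{bigger.note}: mouth ({bigger.mouth_height} mm) is lower than "
                f"{smaller.note} ({smaller.mouth_height} mm) despite a longer pipe"
            )
    return warnings


# Made-up dimensions, purely to exercise the checks.
pipes = [
    PipeSpec("pipe 1", 195.0, 20.0, 25.0, 6.0, 20.0, 20.0),
    PipeSpec("pipe 2", 260.0, 24.0, 30.0, 5.0, 24.0, 23.0),
]
for warning in check_diapason(pipes):
    print(warning)
```

Here "pipe 2" triggers both warnings: its lip is 1 mm narrower than its light, and its mouth is lower than that of the shorter "pipe 1".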

Reference: Philippe’s incredibly detailed blog on organ making, and many other odd websites. The diapason is his, cross-referenced with another diapason, distilled to the essential variables, and with units standardized to millimeters. Thanks in particular to Philippe for his generosity in making this information available and answering my questions.