Disclaimer: I and other people may or may not have had anything to do with figuring this out.

Zoom Rooms emit ultrasound to let devices within “earshot” connect without the user having to type anything. Ultrasound has been used for such proximity-related convenience before, and sometimes for more nefarious purposes such as mapping out who’s next to whom in the world. All of it uses a wireless network that is available anywhere and completely ad hoc. It just doesn’t go very far (thankfully).

In this post we’ll see how to analyze such a signal using Zoom Rooms’ Share Screen signal as an example.

Harvesting the Sound

First things first: to decipher the signal, we need to extract its bits from a fuller audio landscape containing “noise” across various frequencies. Ultrasonic frequencies, the ones the human ear can’t hear, are by definition above 20 kHz. This fluctuates between individuals, and especially between age groups 🙂 but that’s the general cut-off: anything above 20 kHz is inaudible, and thus usable to transmit hidden signals. Microphone and speaker manufacturers, however, have little incentive to build products for the non-human range; humans are the ones giving them money, after all. And so hidden signals tend to sit right around the 20 kHz cutoff, where human-centered manufacturing specs have a good chance of still working.

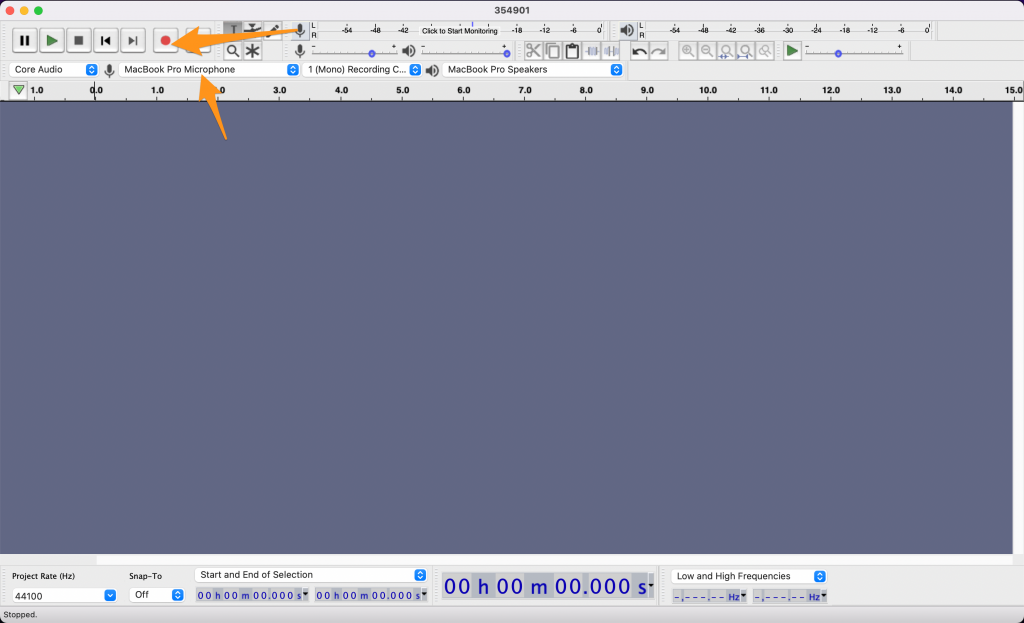

Audacity is great for recording and analysis, so this is what we’ll use here. First, record your sample: using your laptop, get close to the source of the ultrasound, try to keep things quiet during recording, and gather a good sample.

Extracting the Signal

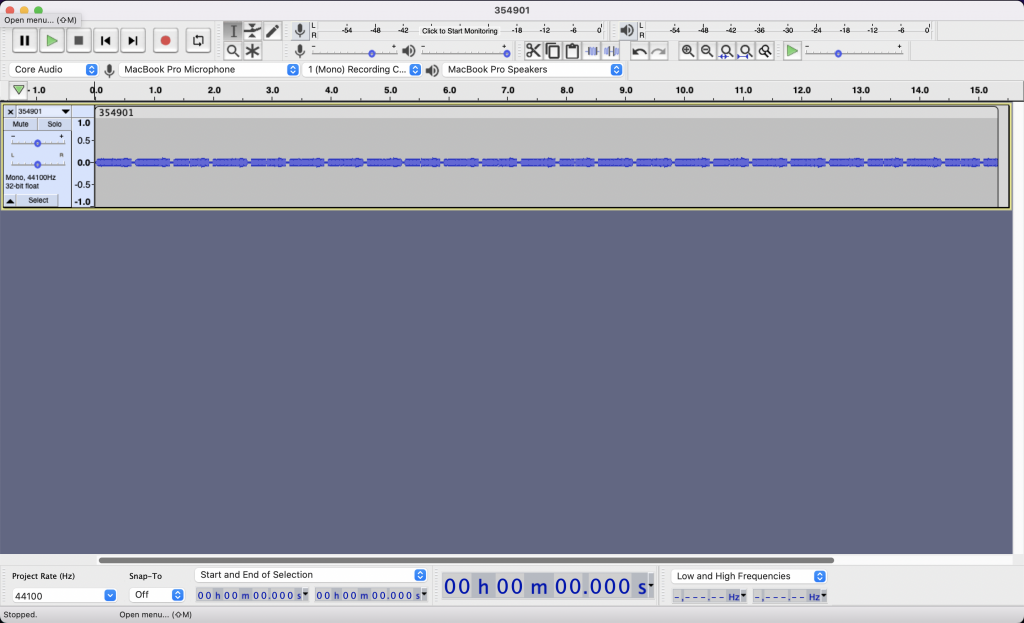

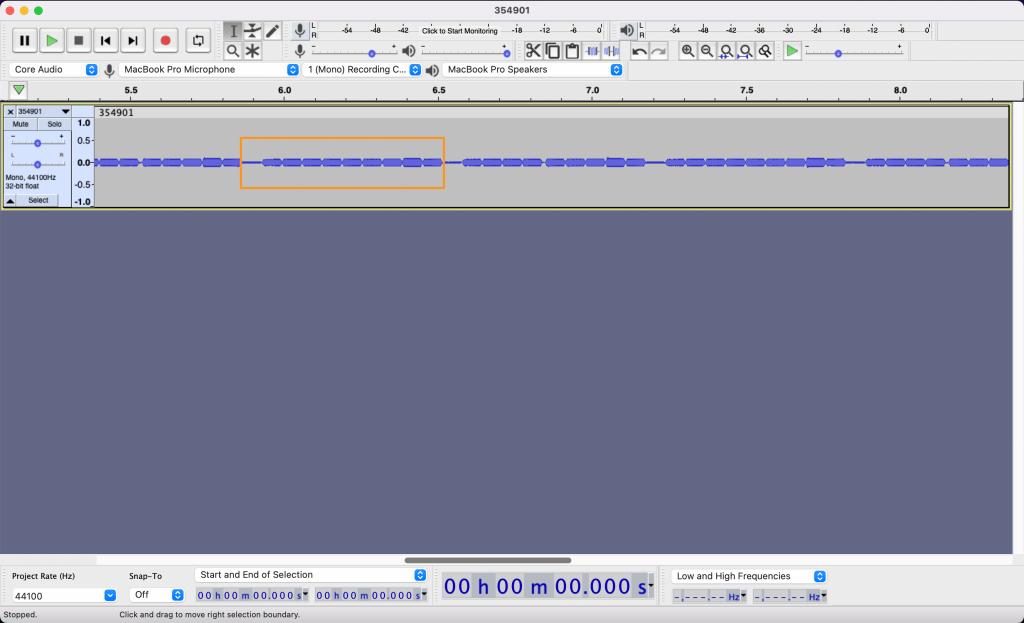

Looking at various time zoom levels, we can home in on a repeating pattern. The room is completely silent, but your computer hears the signal loud and clear.

The complete silence as it was recorded. Note the time scale just above the recording.

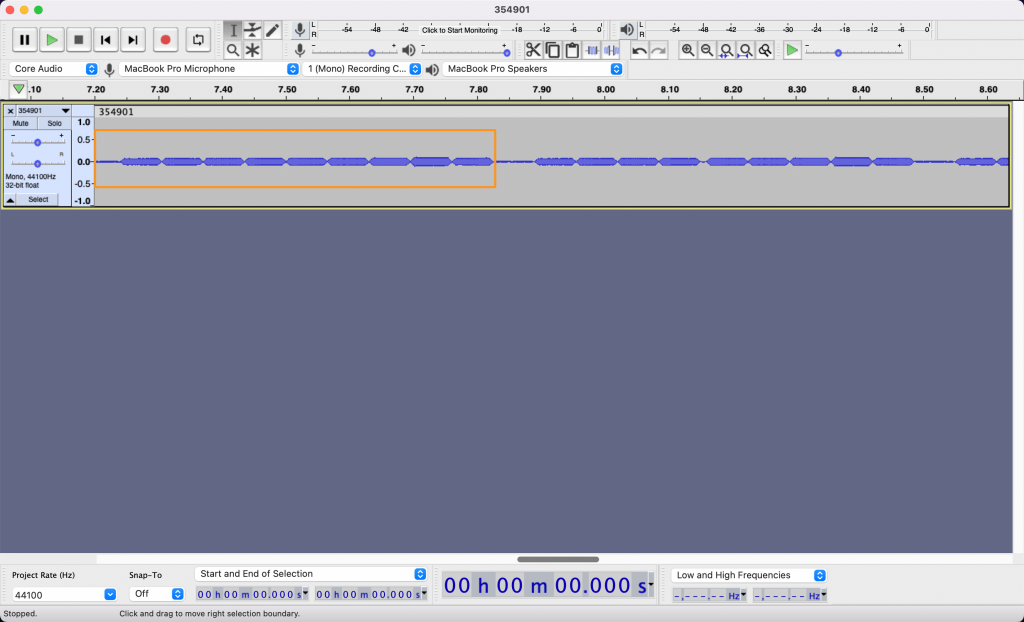

Zooming in on the pattern, each of the pattern’s blobs is made of several smaller blobs. At this level we can guess that the signal is made of 10 notes. Zoom Rooms’ Share Screen codes are 6 digits long, so that feels right: the extra notes could be control characters, or room left for expansion. Now, we don’t really know where the signal starts and where it ends. I’m drawing the rectangle for 10 notes starting on the quiet one, because I could see a silence being used as a separator, much like a space or a line break.

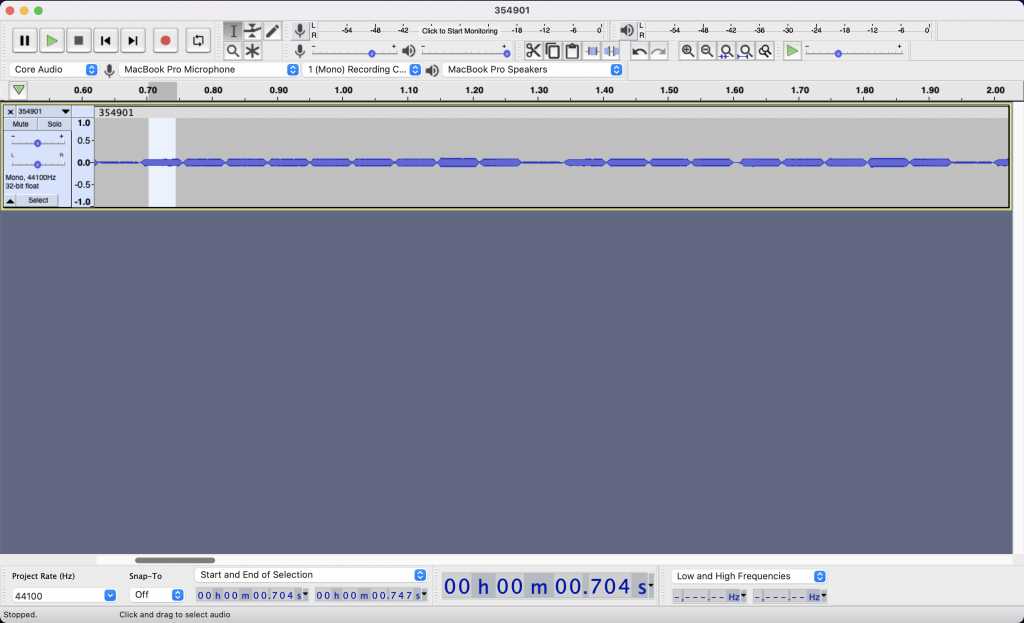

Finally, we zoom in just enough to start poking at each individual note/character.

Pretty easy so far, all we’ve done is record and zoom and we can start seeing our signal. Now begins the tedious task of annotating as many samples of this signal as you can. This is the data that will let you decipher the code.

Select a clean section of just one note: don’t grab the edges, just the meat.

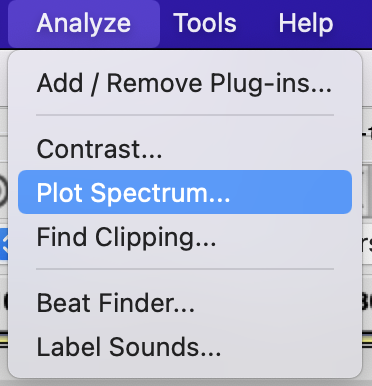

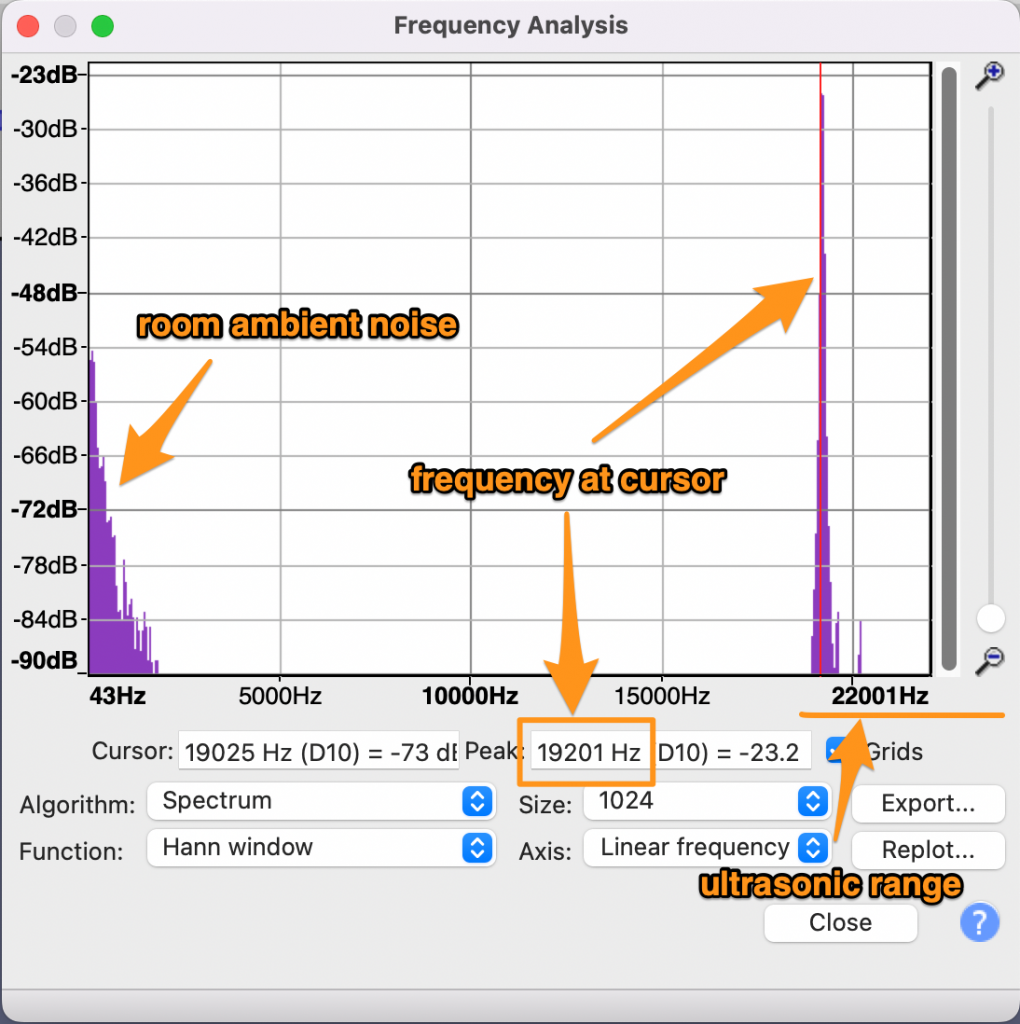

Then click on Analyze -> Plot Spectrum.

This will perform a Fourier transform of the selected area to decompose the sound into all of its various frequencies.

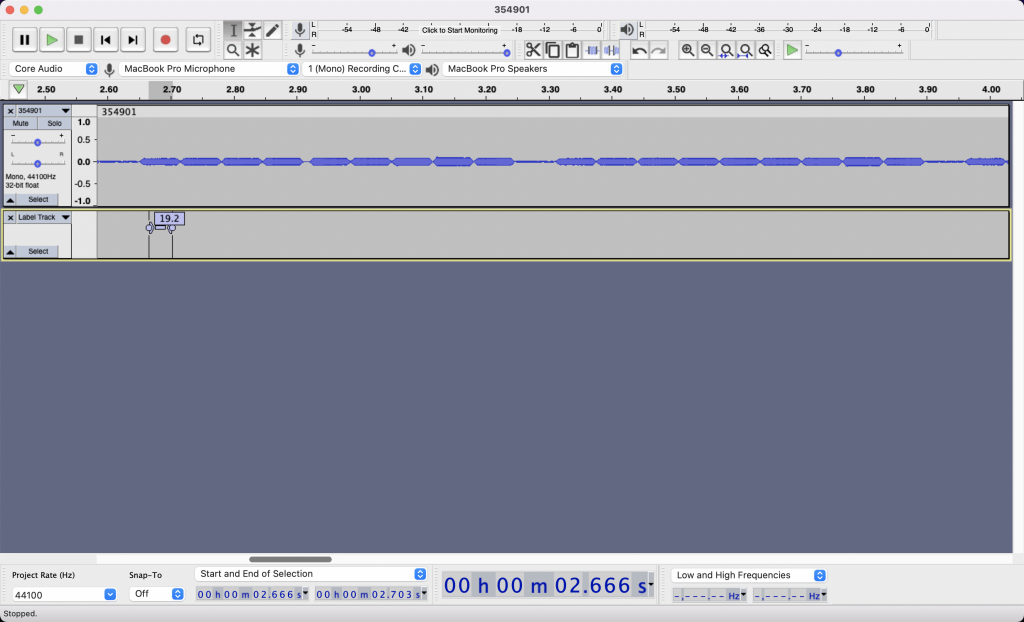

Place the cursor on the highest ultrasonic peak and read the frequency: 19201 Hz, or 19.2 kHz, here.

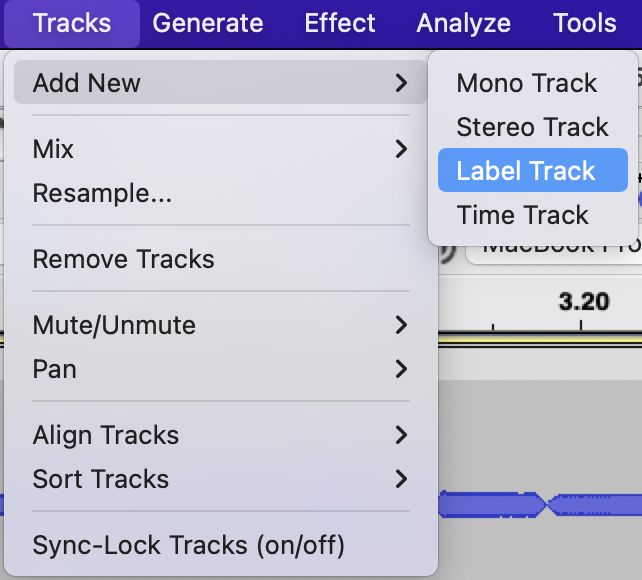
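If clicking through Plot Spectrum for every note gets old, the same "which frequency dominates this note" question can be asked in code. The sketch below is not what Audacity does internally (it uses an FFT); it swaps in the Goertzel algorithm, which measures the power of a handful of candidate frequencies directly. The 48 kHz sample rate, the synthetic 50 ms test tone and the function names are all my own assumptions for illustration.

```javascript
// Goertzel algorithm: power of a single target frequency in a block of samples.
function goertzelPower(samples, sampleRate, targetHz) {
  const omega = (2 * Math.PI * targetHz) / sampleRate;
  const coeff = 2 * Math.cos(omega);
  let s1 = 0;
  let s2 = 0;
  for (const x of samples) {
    const s0 = x + coeff * s1 - s2;
    s2 = s1;
    s1 = s0;
  }
  return s1 * s1 + s2 * s2 - coeff * s1 * s2;
}

// Return the candidate frequency that carries the most power.
function dominantFrequency(samples, sampleRate, candidatesHz) {
  let best = candidatesHz[0];
  let bestPower = -Infinity;
  for (const hz of candidatesHz) {
    const power = goertzelPower(samples, sampleRate, hz);
    if (power > bestPower) {
      bestPower = power;
      best = hz;
    }
  }
  return best;
}

// Synthetic stand-in for one recorded note (assumption: 48 kHz, 50 ms long).
function makeTone(hz, sampleRate, length) {
  return Float64Array.from({ length }, (_, n) =>
    Math.sin((2 * Math.PI * hz * n) / sampleRate)
  );
}

// Candidate grid: every 100 Hz from 19.1 kHz to 20.2 kHz.
const candidatesHz = [];
for (let hz = 19100; hz <= 20200; hz += 100) {
  candidatesHz.push(hz);
}

const sampleRate = 48000;
const note = makeTone(19200, sampleRate, 2400);
console.log(dominantFrequency(note, sampleRate, candidatesHz));
```

On a real recording you would feed in the samples of one selected note instead of `makeTone(...)`, and the recording's sample rate has to be more than twice the highest frequency you are looking for.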

Make note of it by adding a label at the selection. You’ll first need a label track if you don’t already have one.

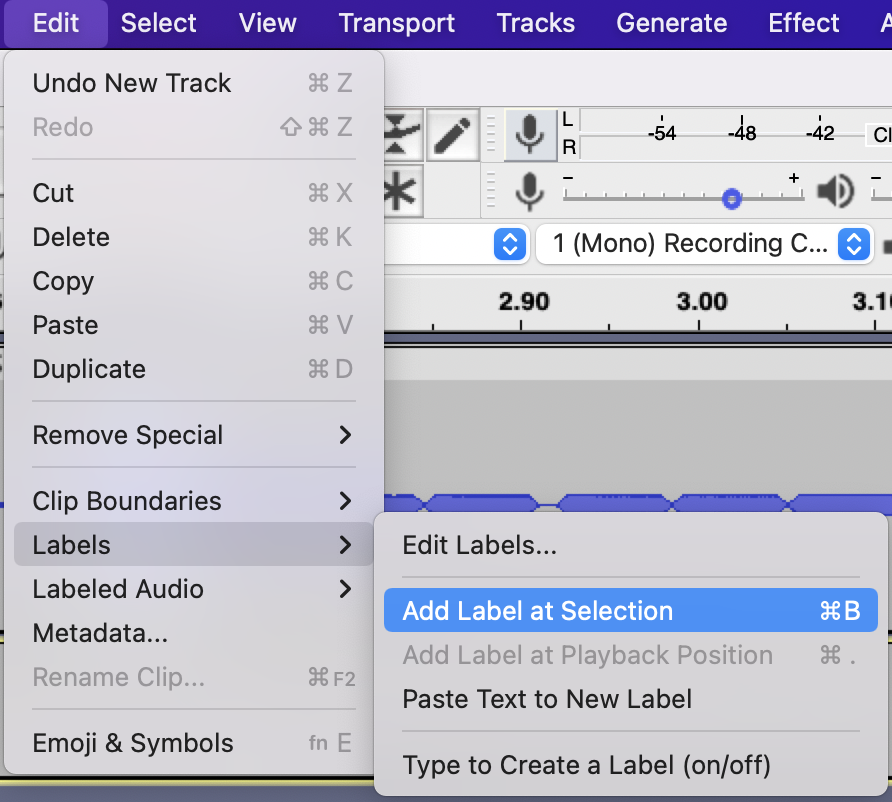

Then you can add the label.

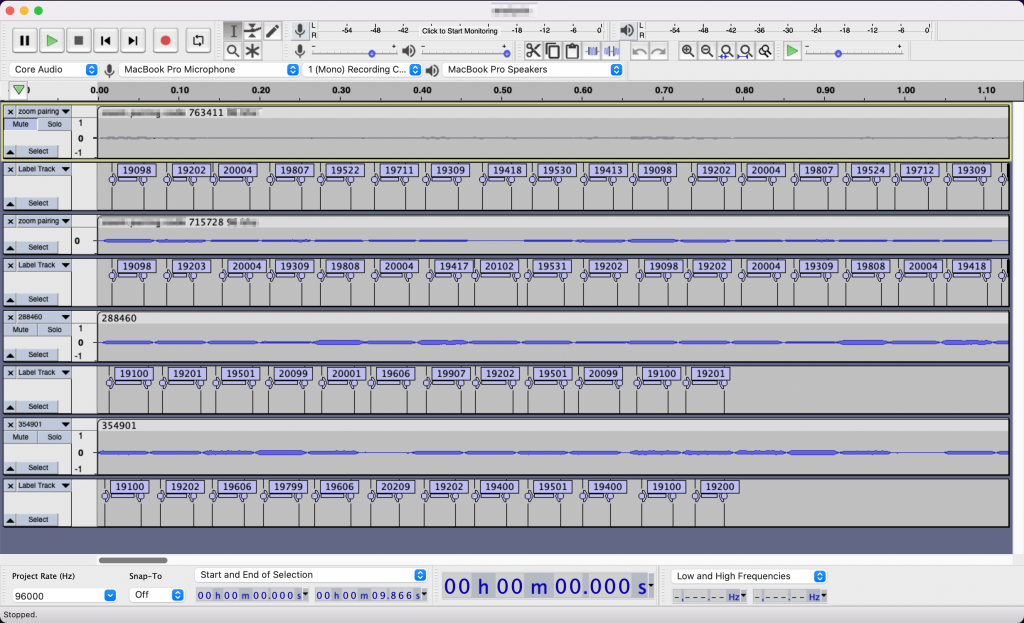

Do this for each note/character in the signal; it’s worth confirming the 10-character repetition we think we’re seeing. Then do this for many more samples… The more data, the easier it’ll be to decipher. Your project, which you should save often, will look something like this:

Deciphering the Signal

Now, obviously depending on what signal you are studying, the encoding will differ. I’m only talking about Zoom Rooms here and so I’ll only give general advice followed by the Zoom algorithm.

The general advice is as follows:

1. Gather a lot of data, this is the non-exciting part so it’s easy to want to move past it.

2. More often than not, there will be control notes/characters indicating the beginning or the end of the signal, or both. In the screenshot above, 19.1 kHz followed by 19.2 kHz looks very much like a control sequence; align your audio sample to it and focus on the remaining notes/characters.

3. Look at the data from different angles, visually write it differently to see if patterns emerge. Spreadsheets can help.

4. Try various scenarios, even if you know they are likely false, they might get you closer to the truth.

5. Occam’s razor (or the lazy programmer) is likely a good guess
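Point 2 is easy to automate once your labels are typed into a list. Here is a small sketch, assuming the suspected 19.1 kHz / 19.2 kHz marker and the 10-note repetition we guessed earlier; the label values and the function names are made up for illustration.

```javascript
// Assumptions taken from the observations so far, not from any official spec.
const CONTROL = [19100, 19200];
const FRAME_LENGTH = 10;

// Snap a measured peak (e.g. 19201 Hz) to the nearest 100 Hz step.
function snap(hz) {
  return Math.round(hz / 100) * 100;
}

// Return every complete frame that starts with the control marker.
function extractFrames(measuredHz) {
  const notes = measuredHz.map(snap);
  const frames = [];
  for (let i = 0; i + FRAME_LENGTH <= notes.length; i++) {
    if (notes[i] === CONTROL[0] && notes[i + 1] === CONTROL[1]) {
      frames.push(notes.slice(i, i + FRAME_LENGTH));
      i += FRAME_LENGTH - 1; // skip past the frame we just captured
    }
  }
  return frames;
}

// Hypothetical labels: the tail of one repetition, one full frame,
// and the first note of the next repetition.
const labels = [
  19398, 20001,
  19101, 19201, 20000, 20200, 19199, 19400, 19800, 19700, 19400, 20000,
  19100,
];
const frames = extractFrames(labels);
console.log(frames);
```

Stacking the aligned frames on top of each other (a spreadsheet works just as well) is what makes the patterns in the remaining notes jump out.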

Zoom Room Rosetta Stone

Each signal starts with 19.1 kHz followed by 19.2 kHz. Then the 6 digits of the code displayed on the screen are “played”. Then come 2 digits representing the checksum of the 6 digits, which is simply their sum (zero-padded to two digits when it is below 10). That’s 10 characters total.
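To make the layout concrete, here is a minimal sketch of the 8 digits that follow the two control notes. The function name `buildPayload` is mine, and the zero-padding of small checksums is an assumption based on the checksum always taking 2 notes.

```javascript
// Build the 8 digits that follow the two control notes:
// the 6-digit code, then the sum of its digits padded to 2 characters.
function buildPayload(code) {
  if (!/^\d{6}$/.test(code)) {
    throw new Error("expected a 6-digit code");
  }
  const sum = [...code].reduce((total, digit) => total + Number(digit), 0);
  return code + String(sum).padStart(2, "0");
}

console.log(buildPayload("790155")); // "79015527"
console.log(buildPayload("100002")); // "10000203"
```

The largest possible sum is 9 × 6 = 54, so two checksum digits are always enough.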

Each digit maps to 2 possible frequencies:

0 -> 19.2 kHz / 19.3 kHz

1 -> 19.3 kHz / 19.4 kHz

2 -> 19.4 kHz / 19.5 kHz

3 -> 19.5 kHz / 19.6 kHz

4 -> 19.6 kHz / 19.7 kHz

5 -> 19.7 kHz / 19.8 kHz

6 -> 19.8 kHz / 19.9 kHz

7 -> 19.9 kHz / 20.0 kHz

8 -> 20.0 kHz / 20.1 kHz

9 -> 20.1 kHz / 20.2 kHz

It looks weird that the ranges overlap, and this is the twist behind this encoding, everything else being rather straightforward: for your next digit, you always pick the frequency furthest from the frequency you just played. If you just played your control signal (19.1 kHz, 19.2 kHz) and your code starts with a 3, you will pick 19.6 kHz to play the 3, as it is furthest from 19.2 kHz. If your next digit is a 2, you will pick 19.4 kHz to play it, as it is furthest from 19.6 kHz. I don’t know enough about sound engineering to know if Zoom did this to disambiguate frequencies which are close to each other, or if it’s meant as a cipher. I’m guessing the former: it seems to be a smart way to guarantee at least 0.1 kHz of difference between 2 consecutive characters, even when a digit repeats, while only adding 0.1 kHz of spectrum. Since we know devices are likely to distort at the beginning of the inaudible range, it makes sense to make an extra effort to distinguish characters while not expanding too far into that range. Pretty cool eh?
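The selection rule fits in a few lines. This is a sketch of the rule as I understand it from the recordings, not Zoom's actual implementation; `candidates`, `pickFurthest` and `encodeDigits` are names I made up, and frequencies are in Hz.

```javascript
const CONTROL = [19100, 19200];

// The two candidate frequencies for a digit: 19200 + 100 * digit, and one step up.
function candidates(digit) {
  const low = 19200 + 100 * digit;
  return [low, low + 100];
}

// Of the two candidates, keep the one furthest from the previous note.
function pickFurthest(previous, [low, high]) {
  return Math.abs(previous - low) > Math.abs(previous - high) ? low : high;
}

// Turn a string of digits into the notes to play, control notes included.
function encodeDigits(digits) {
  const notes = [...CONTROL];
  for (const ch of digits) {
    const previous = notes[notes.length - 1];
    notes.push(pickFurthest(previous, candidates(Number(ch))));
  }
  return notes;
}

console.log(encodeDigits("32")); // [19100, 19200, 19600, 19400]
```

A tie can never happen: the two candidates are 100 Hz apart and every note sits on a 100 Hz step, so the previous note is never exactly halfway between them.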

Here’s a real world example, say that the code played is 790155:

first you play the control: 19.1 kHz, 19.2 kHz

then 7 with 20.0 kHz as it is the furthest from 19.2 kHz

then 9 with 20.2 kHz as it is the furthest from 20.0 kHz

then 0 with 19.2 kHz as it is the furthest from 20.2 kHz

then 1 with 19.4 kHz as it is the furthest from 19.2 kHz

then 5 with 19.8 kHz as it is the furthest from 19.4 kHz

then 5 with 19.7 kHz as it is the furthest from 19.8 kHz

compute your checksum of 7+9+0+1+5+5 = 27

play 2 with 19.4 kHz as it is the furthest from 19.7 kHz

then 7 with 20.0 kHz as it is the furthest from 19.4 kHz

Voila!
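Going the other way, from labelled frequencies back to the code, follows from the same rule: since the player always moves away from the previous note, a note lower than the previous one must be the digit's lower frequency, and a note higher than the previous one must be its upper frequency. Here is a sketch of a decoder under that assumption; `decodeFrame` is my own name, and it expects one aligned 10-note frame in Hz.

```javascript
// Decode one 10-note frame: 2 control notes, 6 code digits, 2 checksum digits.
function decodeFrame(measuredHz) {
  // Snap measurements such as 19201 Hz to the nearest 100 Hz step.
  const notes = measuredHz.map((hz) => Math.round(hz / 100) * 100);
  if (notes.length !== 10 || notes[0] !== 19100 || notes[1] !== 19200) {
    throw new Error("frame must be 10 notes starting with 19100, 19200");
  }
  let digits = "";
  for (let i = 2; i < notes.length; i++) {
    if (notes[i] === notes[i - 1]) {
      throw new Error(`note ${i} repeats the previous frequency`);
    }
    const slot = (notes[i] - 19200) / 100;
    // Lower than the previous note: the digit's lower frequency (digit = slot).
    // Higher than the previous note: its upper frequency (digit = slot - 1).
    const digit = notes[i] < notes[i - 1] ? slot : slot - 1;
    if (digit < 0 || digit > 9) {
      throw new Error(`note ${i} does not map to a digit`);
    }
    digits += digit;
  }
  const code = digits.slice(0, 6);
  const sum = [...code].reduce((total, d) => total + Number(d), 0);
  return { code, checksumOk: Number(digits.slice(6)) === sum };
}

const decoded = decodeFrame(
  [19101, 19201, 20000, 20200, 19200, 19400, 19800, 19700, 19400, 20000]
);
console.log(decoded); // { code: "790155", checksumOk: true }
```

The checksum is a handy sanity check on your own labelling: if it fails, one of the notes was probably misread.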

Some Code to go along with it

If you want to play the Share Screen code from your Zoom Rooms into the world, the following code will do it for you for roughly 25 seconds (50 repetitions of ten 50 ms notes). Just make sure to update the “code” variable near the top. This code runs in a standard browser’s web inspector console.

    (async function main() {
      // Replace with the 6-digit Share Screen code shown on the Zoom Rooms display.
      const code = "<6_digit_code_goes_here>";
      if (!/^\d{6}$/.test(code)) {
        throw new Error("code must be exactly 6 digits");
      }

      // A sine oscillator routed through a gain node to the speakers.
      const context = new AudioContext();
      const o = context.createOscillator();
      o.type = "sine";
      const g = context.createGain();
      o.connect(g);
      o.frequency.value = 0;
      g.connect(context.destination);
      o.start(0);

      const sleep_time_ms = 50; // duration of each note
      const control_frequencies = [19100, 19200]; // start-of-signal marker

      // Each digit can be played on either of two adjacent frequencies (Hz).
      const frequencies = {
        0: [19200, 19300],
        1: [19300, 19400],
        2: [19400, 19500],
        3: [19500, 19600],
        4: [19600, 19700],
        5: [19700, 19800],
        6: [19800, 19900],
        7: [19900, 20000],
        8: [20000, 20100],
        9: [20100, 20200],
      };

      // Of the two candidates, return the one furthest from the previous note.
      function pick_furthest_frequency(previous, possible_new_frequencies) {
        const [low, high] = possible_new_frequencies;
        return Math.abs(previous - low) > Math.abs(previous - high) ? low : high;
      }

      // Set the oscillator to a frequency and hold it for one note.
      async function play(frequency) {
        o.frequency.value = frequency;
        await new Promise((r) => setTimeout(r, sleep_time_ms));
        return frequency;
      }

      // Payload: the 6 digits followed by their sum, zero-padded to 2 digits.
      const checksum = [...code].reduce((sum, d) => sum + parseInt(d, 10), 0);
      const payload = code + checksum.toString().padStart(2, "0");

      console.log("starting ultrasound emission");
      for (let i = 0; i < 50; i++) {
        let last_frequency;
        for (const frequency of control_frequencies) {
          last_frequency = await play(frequency);
        }
        for (const digit of payload) {
          last_frequency = await play(
            pick_furthest_frequency(last_frequency, frequencies[digit])
          );
        }
      }
      console.log("stopped ultrasound emission");

      // Fade out quickly, then stop the oscillator.
      g.gain.setValueAtTime(g.gain.value, context.currentTime);
      g.gain.exponentialRampToValueAtTime(0.00001, context.currentTime + 0.04);
      o.stop(context.currentTime + 0.05);
    })();