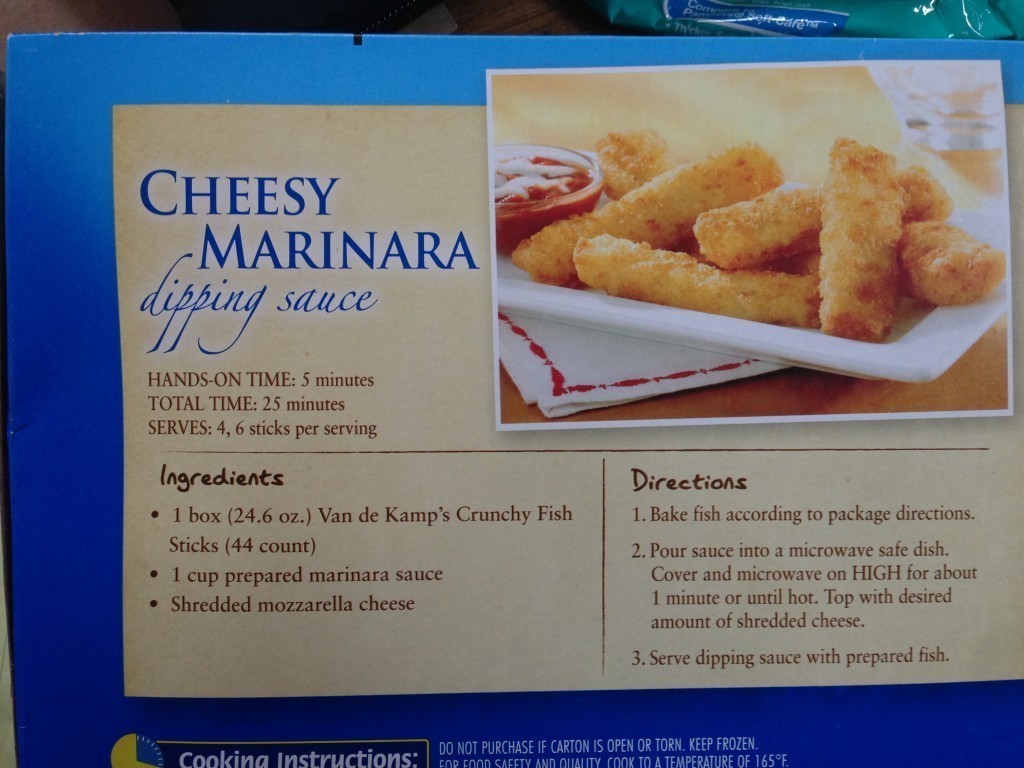

Recipe for Cheesy Marinara dipping sauce:

- acquire some prepared marinara sauce

- add cheese

- high-five yourself

[flv:http://ben.akrin.com/wp-content/uploads/2013/04/chicks01.flv 640 426]

[flv:http://ben.akrin.com/wp-content/uploads/2013/04/chicks02.flv 640 426]