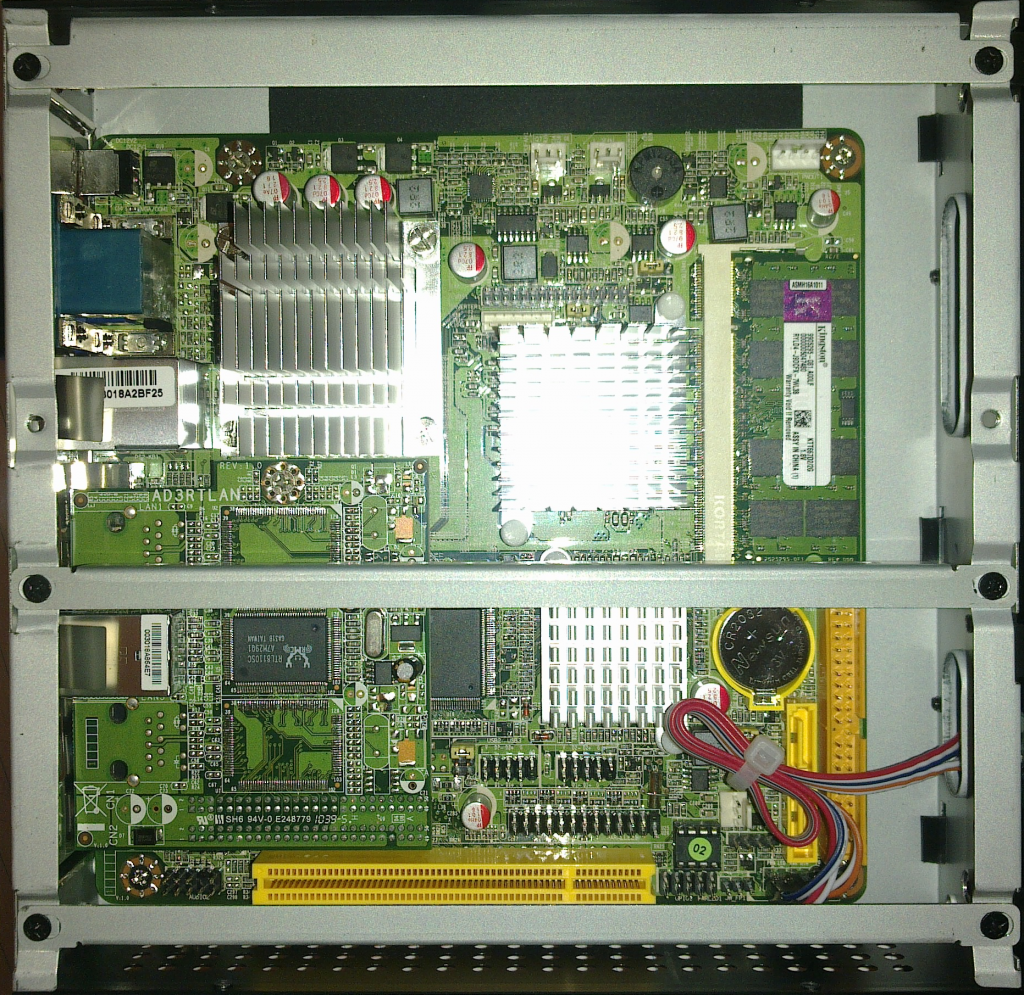

The hardware showed up! So I get busy installing the RAM and the SSD. Habey, in all its generosity, included a SATA data cable with its barebone server. This is cool, I guess. I mean, I already have a bunch, and hard disks always come with cables, but I’ll take it.

I start hooking up the SSD when I realize that there are no SATA power connectors anywhere.

Do you see anything?

The problem is that apparently I’m the only person who ever bought one of these systems. There is literally no information available on any site (including www.habeyusa.com) on how to power your hard drives. Even though the board has an IDE slot, there is no 4-pin Molex power connector either, so no luck hijacking one of those for the SATA SSD.

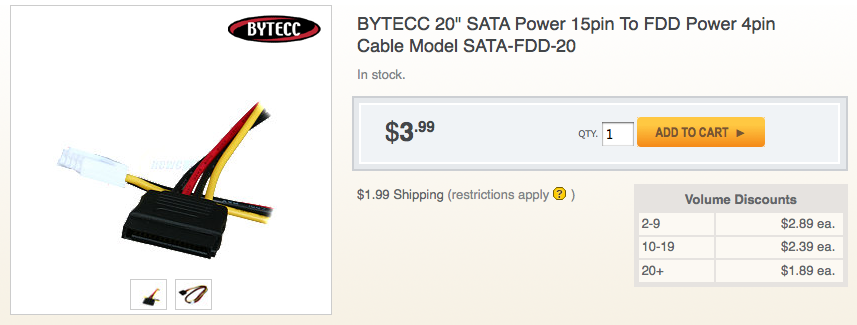

After careful examination of the motherboard, I found one connector labeled “POWOUT1”. It has a shape I haven’t seen for ages. I hope you’re sitting down as you read this: it is shaped for 3.5″ floppy disk drive power. And that’s the only power that seems tappable for hard drives. A lot of searching on the web turns up many 4-pin Molex to SATA converters, and eventually some floppy power to 4-pin Molex ones. Ultimately I found just the cable I needed.

You’re reading that right: SATA power 15-pin to FDD (as in Floppy Disk Drive) power 4-pin…

Habey thought to include a standard SATA data cable but not their weird-ass power equivalent. And if you look carefully, SATA power cables have 5 wires, while the one in the picture above has only 4. The 3.3 V wire has simply been dropped. Doesn’t this affect functionality?

Well fuck everything, I’m not waiting 5 more days for a silly cable. Thankfully we have a master hardware tinkerer at work. After checking the voltages on the motherboard connector’s pins (to confirm that it was indeed FDD power), we cannibalized a couple of old power supplies and came up with a Frankenstein cable.
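For anyone repeating the multimeter check, it boils down to roughly the following. This is only a sketch in Python, assuming the standard floppy (Berg) power pinout of +5 V on pin 1, ground on pins 2 and 3, and +12 V on pin 4. The ±5% tolerance, the function name `looks_like_fdd_power` and the sample readings are made up for illustration and are not from any Habey documentation.

```python
# Nominal voltages on a standard 4-pin floppy (Berg) power connector.
# Assumption: POWOUT1 follows this pinout; the board itself is undocumented.
FDD_NOMINAL_VOLTS = {1: 5.0, 2: 0.0, 3: 0.0, 4: 12.0}

TOLERANCE = 0.05      # assumed +/-5 % on the supply rails
GROUND_LIMIT = 0.1    # assumed: a ground pin should read within 0.1 V of zero


def looks_like_fdd_power(measured):
    """Return True if the multimeter readings (pin -> volts) match FDD power."""
    for pin, nominal in FDD_NOMINAL_VOLTS.items():
        reading = measured[pin]
        if nominal == 0.0:
            # Ground pins: compare against an absolute limit.
            if abs(reading) > GROUND_LIMIT:
                return False
        elif abs(reading - nominal) > nominal * TOLERANCE:
            # Supply pins: compare against a relative tolerance.
            return False
    return True


# Hypothetical readings, measured against chassis ground.
readings = {1: 5.02, 2: 0.0, 3: 0.0, 4: 11.9}
print(looks_like_fdd_power(readings))
```

If the 5 V and 12 V pins came back swapped, the function would return False, which is exactly the case you want to catch before plugging in an SSD.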

TADAAAAAA!!

And it works perfectly. Seriously, Habey: better labeling, a motherboard manual (online or paper), or that weird-ass cable in the box would have been nice.

Tomorrow we’ll stress test the box and it’d better take the beating without crashing.

Thanks to playtool.com for their very helpful resource.